AutoGen: Enabling Next-Gen LLM Applications via Multi-Agent Conversation

AutoGen is a flexible, open-source multi-agent framework for building modular LLM applications from conversable agents whose interactions are programmed in code.

1. Paper Metadata & One-Line Summary

- Paper Title: AutoGen: Enabling Next-Gen LLM Applications via Multi-Agent Conversation

- ArXiv ID / DOI: arXiv:2308.08155v2

- Authors / Institution: Qingyun Wu, Gagan Bansal, Jieyu Zhang, et al. / Microsoft Research, Penn State, University of Washington, Xidian University

- Published Date: October 3, 2023

- Link: https://arxiv.org/abs/2308.08155

2. Core Idea & Innovation

- Problem Addressed: Simplifying the development and execution of complex LLM workflows that require coordination among multiple agents, tools, and possibly humans.

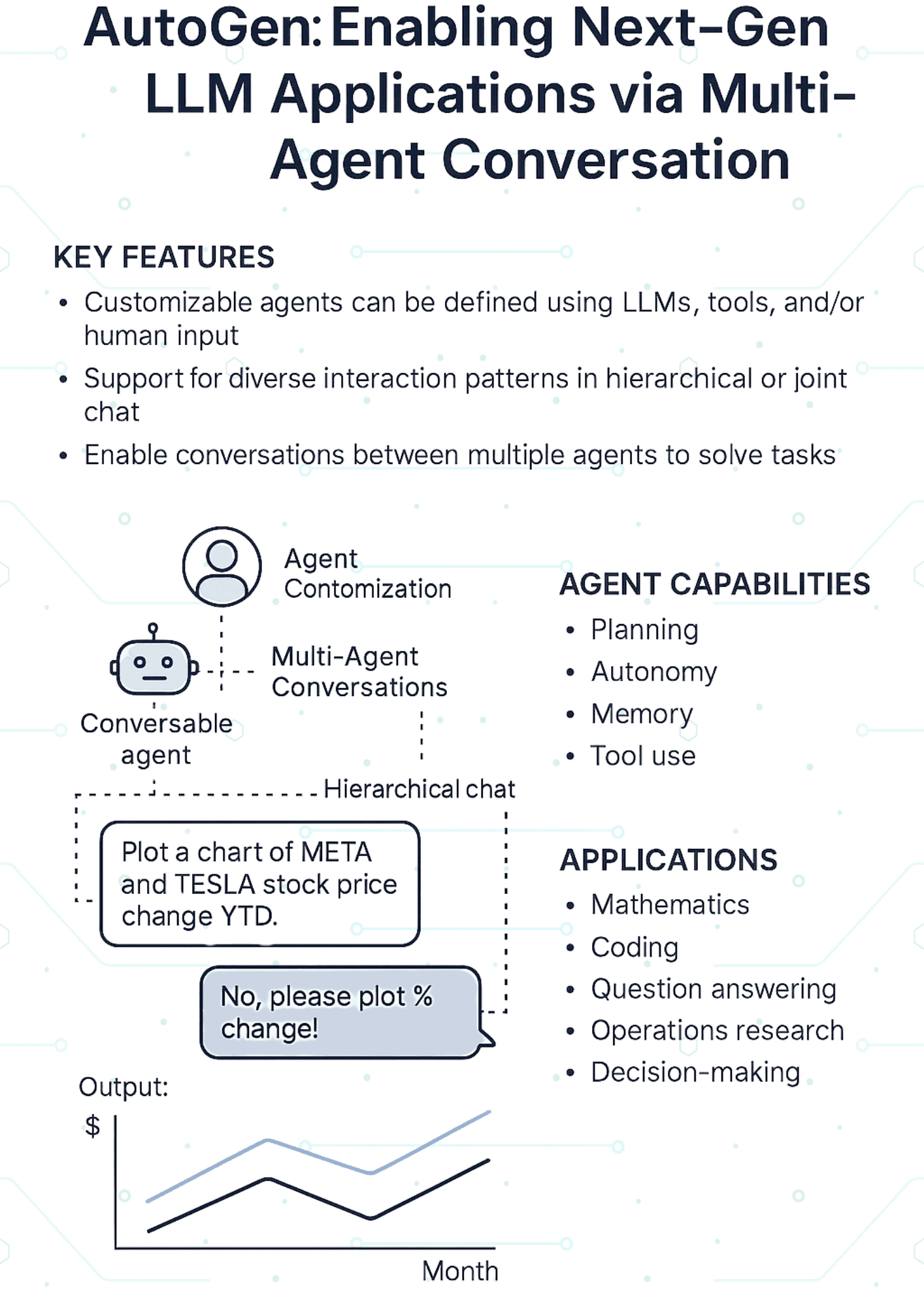

- What’s New or Disruptive?: Introduction of "conversable agents" and "conversation programming" to unify and automate multi-agent interactions in LLM applications.

- Which Agentic Capabilities Are Covered?: Planning, Autonomy, Memory, Tool Use, Orchestration

- Type of Work: System Design
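The conversable-agent idea can be illustrated with a minimal, framework-free Python sketch. Each agent keeps its own message history, and receiving a message triggers an automatic reply until a termination condition is met. This loosely mirrors the send / receive / generate-reply pattern the paper describes. However, the class `MiniConversableAgent`, the scripted reply functions, and the `max_auto_replies` cap are hypothetical illustrations, not AutoGen's actual API.

```python
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]  # {"sender": ..., "content": ...}


class MiniConversableAgent:
    """Hypothetical, minimal stand-in for a conversable agent (not AutoGen's class)."""

    def __init__(self, name: str,
                 reply_fn: Callable[[List[Message]], str],
                 max_auto_replies: int = 5):
        self.name = name
        self.reply_fn = reply_fn              # produces the next message from history
        self.max_auto_replies = max_auto_replies
        self.history: List[Message] = []      # every message this agent sent or received
        self._replies_sent = 0

    def send(self, content: str, recipient: "MiniConversableAgent") -> None:
        msg = {"sender": self.name, "content": content}
        self.history.append(msg)
        recipient.receive(msg, self)

    def receive(self, msg: Message, sender: "MiniConversableAgent") -> None:
        self.history.append(msg)
        reply = self.generate_reply()
        if reply is not None:
            # Auto-reply: answering on receipt is what moves the conversation forward.
            self.send(reply, sender)

    def generate_reply(self) -> Optional[str]:
        last = self.history[-1]["content"]
        if "TERMINATE" in last or self._replies_sent >= self.max_auto_replies:
            return None  # stop: termination message seen or reply budget used up
        self._replies_sent += 1
        return self.reply_fn(self.history)


# Scripted reply functions stand in for LLM calls.
def assistant_reply(history: List[Message]) -> str:
    steps_done = sum(1 for m in history if m["sender"] == "assistant")
    return "TERMINATE" if steps_done >= 3 else f"step {steps_done + 1}"


def user_proxy_reply(history: List[Message]) -> str:
    return "ok, continue"


assistant = MiniConversableAgent("assistant", assistant_reply)
user_proxy = MiniConversableAgent("user_proxy", user_proxy_reply)
user_proxy.send("Please solve the task step by step.", assistant)
for m in assistant.history:
    print(f"{m['sender']}: {m['content']}")
```

The control flow here is expressed entirely by who replies to whom and when each agent stops. That is the core of "conversation programming." The recursive send/receive chain is fine for a toy example, but a longer-running conversation would likely need an explicit loop to avoid deep call stacks.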

3. System Components & Technical Design

- LLM Backbone: GPT-4, GPT-3.5-turbo

- Agent Framework: AutoGen (custom framework)

- Memory + Retrieval: Retrieval-Augmented Generation (RAG) backed by vector databases (e.g., Chroma); conversation history serves as each agent's working context

- Execution / Tool Use: Supports Python code execution, function calling, shell scripting

- Orchestration Logic: Conversable agents with auto-reply mechanism and dynamic control flow

- Infrastructure: Open-source, extensible; supports containerization and external tool integration

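- Monitoring / Guardrails: Safety checks on generated code (e.g., a dedicated safeguard agent in the OptiGuide application) and grounding agents that supply commonsense knowledge (e.g., in the ALFWorld application)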

- HITL Interfaces: Human-in-the-loop via UserProxyAgent with configurable interaction levels
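Two of the components above, tool use via function calling and human-in-the-loop gating, can be sketched together. AutoGen's `UserProxyAgent` exposes a `human_input_mode` setting with the values "ALWAYS", "TERMINATE", and "NEVER". The sketch below imitates that idea in plain Python. `MiniUserProxy`, `TOOLS`, `register_tool`, and `execute_function_call` are hypothetical names, and the gating rules are a simplification rather than AutoGen's exact behavior. The message shape loosely follows OpenAI's legacy `function_call` format, in which `arguments` is a JSON-encoded string.

```python
import json
from typing import Callable, Dict, Optional

# Hypothetical tool registry: maps a function name to a Python callable.
TOOLS: Dict[str, Callable[..., object]] = {}


def register_tool(fn: Callable[..., object]) -> Callable[..., object]:
    """Decorator that exposes a function to the agent under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@register_tool
def add(a: float, b: float) -> float:
    return a + b


def execute_function_call(call: dict) -> str:
    """Run a call shaped like {"name": ..., "arguments": "<JSON string>"}."""
    fn = TOOLS.get(call["name"])
    if fn is None:
        return f"Error: unknown tool {call['name']!r}"
    try:
        args = json.loads(call["arguments"])
        return str(fn(**args))
    except (json.JSONDecodeError, TypeError) as exc:
        # Report failures back as text so the model can correct its call.
        return f"Error: {exc}"


class MiniUserProxy:
    """Hypothetical user proxy: asks a human depending on the mode, else auto-replies."""

    MODES = {"ALWAYS", "TERMINATE", "NEVER"}

    def __init__(self, human_input_mode: str = "TERMINATE",
                 ask_human: Callable[[str], str] = input):
        if human_input_mode not in self.MODES:
            raise ValueError(f"unknown mode: {human_input_mode}")
        self.mode = human_input_mode
        self.ask_human = ask_human

    def reply(self, message: dict) -> Optional[str]:
        content = message.get("content") or ""
        is_final = "TERMINATE" in content
        # ALWAYS: consult the human on every turn; TERMINATE: only at the end.
        if self.mode == "ALWAYS" or (self.mode == "TERMINATE" and is_final):
            feedback = self.ask_human(f"Reply to {content!r} (empty = automatic): ")
            if feedback:
                return feedback  # human input takes precedence over automation
        if is_final:
            return None  # end the conversation
        if "function_call" in message:
            return execute_function_call(message["function_call"])
        return None


# NEVER mode: the proxy executes the proposed tool call without asking anyone.
auto = MiniUserProxy("NEVER")
print(auto.reply({"content": None,
                  "function_call": {"name": "add", "arguments": '{"a": 2, "b": 3}'}}))

# ALWAYS mode with a scripted "human" who just presses Enter (empty input).
reviewed = MiniUserProxy("ALWAYS", ask_human=lambda prompt: "")
print(reviewed.reply({"content": "TERMINATE"}))
```

Injecting `ask_human` as a callable keeps the gating logic testable. A real deployment could swap in a chat UI or approval queue instead of `input`.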

4. Analysis Through AIC AI Native Lens 🔍

| AIC Pillar | Score (1–5) | Notes |
|---|---|---|
| Context Layer (MCP + Memory) | 4 | Supports memory through vector DB and history-based context passing |
| Modular Control Plane | 5 | Fully modular agent design with pluggable capabilities |
| Agentic Orchestration | 5 | Robust orchestration via auto-reply and role-based flows |
| A2A Interface Layer (Actionable APIs) | 4 | Function calling and tool use supported but may need richer API schema integration |
| Guardrails & Observability | 4 | Safeguards via agent roles and response validation, though not fully enterprise-hardened |
| AI Native Infra (Cloud/CDK readiness) | 3 | Lacks native AWS/BYO infra bindings but can be containerized |
| Human-AI Feedback Systems | 4 | Strong support via configurable HITL agents and conversational checkpoints |

5. Enterprise Readiness Evaluation 🏢

| Factor | Rating (1–5) | Justification |
|---|---|---|
| Scalability to Prod | 4 | Well-structured, extensible but requires custom infra integration |
| Architecture Clarity | 5 | Highly modular and readable abstraction for agent creation and orchestration |
| Alignment with AWS / Bedrock Ecosystem | 3 | Lacks out-of-the-box AWS CDK/Bedrock integrations |
| Adaptability to Enterprise Tooling | 4 | Easily adaptable via tool-agent pattern but needs enterprise-scale hardening |
| Security & Governance Integration | 3 | Basic safeguards, requires further extensions for enterprise security needs |

6. Agentic AI Maturity Verdict 🚦

- Verdict Label: ⚙️ Modular Proof-of-Concept

- Rationale: Strong foundation with practical usability, but it lacks native enterprise infrastructure support and full lifecycle governance.

7. Final Commentary: From Research to Real-World AIC

- Key Takeaways:

  - Enables modular, reusable agent design for LLM workflows

  - Simplifies complex control flows through conversation programming

  - Demonstrates performance advantages over existing baselines in tasks like math solving, RAG, and code generation

- How it Inspires AIC Use Cases:

  - Autonomous Tech Support Agents

  - Retrieval-Augmented Code Assistants

  - Conversational Decision Agents in enterprise ops

- What It’s Missing: Native enterprise cloud bindings, richer security/governance hooks

- Feature Suggestion: Add an AWS CDK module and a monitoring/telemetry pipeline for enterprise deployment

- Image Idea: Diagram showing multiple agents (LLM, human, tool) conversing through a central AutoGen orchestrator with feedback loops and execution trails
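The suggested telemetry pipeline and the "execution trails" in the image idea could start as a simple append-only event log. The following sketch is a hypothetical design, not part of AutoGen. `ExecutionTrail` wraps any reply function, times it, and records who said what to whom. It then exports the trail as JSON Lines, a format that a log shipper or tracing backend could ingest. `TrailEvent`, `ExecutionTrail`, and `traced` are illustrative names only.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from typing import Callable, List


@dataclass
class TrailEvent:
    seq: int            # position in the conversation
    sender: str
    recipient: str
    content: str
    latency_ms: float   # elapsed time spent producing this message


@dataclass
class ExecutionTrail:
    """Hypothetical append-only record of agent messages (not an AutoGen feature)."""
    events: List[TrailEvent] = field(default_factory=list)

    def traced(self, sender: str, recipient: str,
               reply_fn: Callable[[str], str]) -> Callable[[str], str]:
        """Wrap a reply function so each reply is timed and appended to the trail."""
        def wrapper(incoming: str) -> str:
            start = time.perf_counter()
            reply = reply_fn(incoming)
            elapsed_ms = (time.perf_counter() - start) * 1000
            self.events.append(TrailEvent(len(self.events), sender, recipient,
                                          reply, round(elapsed_ms, 3)))
            return reply
        return wrapper

    def to_jsonl(self) -> str:
        """Serialize one JSON object per line, ready for a log shipper."""
        return "\n".join(json.dumps(asdict(e)) for e in self.events)


# Scripted agents; in practice reply_fn would call an LLM or execute code.
trail = ExecutionTrail()
coder = trail.traced("coder", "reviewer", lambda msg: f"draft for: {msg}")
reviewer = trail.traced("reviewer", "coder", lambda msg: "TERMINATE")
reviewer(coder("sort a list"))
print(trail.to_jsonl())
```

One event per line makes a trail easy to tail, diff, or replay. For enterprise use, redaction of sensitive message content would likely be needed before events leave the process.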